An Amazon Echo owner was left shocked after Alexa proposed a dangerous challenge to her ten-year-old daughter.

AI-powered virtual assistants like Alexa, which power smart speakers such as the Echo, Echo Dot, and Amazon Tap, come with a wide range of capabilities. These include letting users play simple verbal games or request “challenges” on demand.

With time on their hands, such as during the holidays, it wouldn’t be unusual for an Amazon Echo owner to ask Alexa, “tell me a challenge to do.”

Typically, such a voice request prompts Alexa to respond with a quiz question or a similar brain-teaser.

But that wasn’t the case for Kristin Livdahl’s ten-year-old daughter, who was offered a potentially lethal challenge:

“The challenge is simple,” said Alexa. “Plug in a phone charger about halfway into a wall outlet, then touch a penny to the exposed prongs.”

“I was right there and yelled, ‘No, Alexa, no!’ like it was a dog,” said Livdahl, a writer and a worried mother.

“My daughter says she is too smart to do something like that anyway.”

Virtual assistants source much of their content, including answers to users’ questions and ideas for game challenges, from search engines and third-party websites. The lack of curation naturally left Livdahl and other internet users concerned about the threats AI can pose to their children’s safety.

“You have to disable the ‘kill my child’ setting,” taunted one user.

“That’s shocking!” tweeted Chris Tisdall with a pun, intended or otherwise.

Sure enough, a discussion followed about why UK and German electrical plugs are safer [1, 2] against such a challenge, should anyone be tempted to try it.

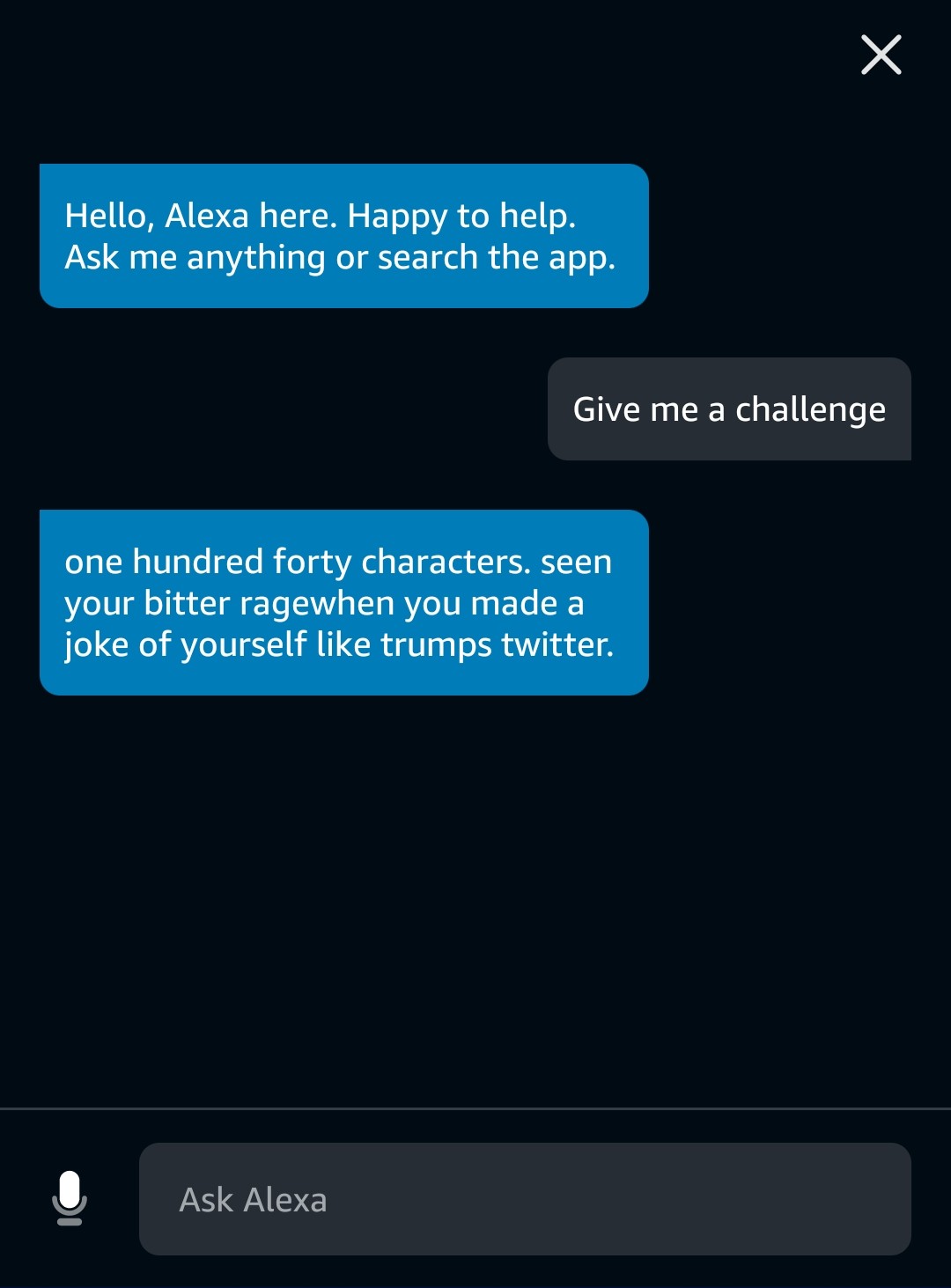

Twitter user John reported seeing Alexa present another bizarre challenge.

By contrast, the responses to BleepingComputer’s requests for a “challenge” from Google’s virtual assistant included:

“Name three popular songs from the year 2028 (?)… what’s the lyrical tune from one of them [tune plays…]”

“You’ve found a magic film ticket. Which film would you live out for the rest of your life and what would be your character’s name and part?”

“You’re the first person on Mars. What are your first words back to Earth when you step onto the red planet?”

Siri simply didn’t understand our requests to “give me a challenge” and consistently responded either with “I’m sorry” or, in another test, with web search results.

Amazon didn’t say what caused the issue, but in a statement to Indy100, the tech giant confirmed it had been fixed.

“Customer trust is at the center of everything we do and Alexa is designed to provide accurate, relevant, and helpful information to customers,” an Amazon spokesperson reportedly said.

“As soon as we became aware of this error, we took swift action to fix it.”

The challenge appears to have been sourced automatically by Alexa from a January 2020 post on ourcommunitynews.com, published amid the risky “outlet challenge” trend on TikTok at the time.

As adults once told you, plugging a phone charger or any other gadget only halfway into an electrical outlet and touching metal or bare skin to the exposed prongs is a shock and fire hazard, because of the sparks and electrical arcs it can cause.

“We have been doing some physical challenges from a Phy Ed teacher on YouTube as the weather gets colder and she just wanted another one. I was right there. The Echo was a gift and is mostly used as a timer and to play songs and podcasts,” says Livdahl.

“It was a good moment to go through internet safety and not trusting things you read without research and verification again. We thought the cesspool of YouTube was what we needed to worry about at this age—with limited internet and social media access—but not the only thing.”